About Penelope

Penelope is a cloud-based, open and modular platform that consists of tools and techniques for mapping landscapes of opinions expressed in online (social) media. The platform is used for analysing the opinions that dominate the debate on certain crucial social issues, such as immigration, climate change and national identity.

The Penelope ecosystem groups a large variety of components and interfaces that connect to each other through web APIs. These components and interfaces can serve many different functions, including:

- Components for gathering data, e.g. from databases or via the API of social media sites

- Components for analysing data, e.g. natural language processing, network analysis or dimensionality reduction tools

- Components for visualising data, e.g. tools for visually plotting data or insights from analyses

- Interfaces that allow users to work with Penelope components without having to program, e.g. visual programming workbenches

- Interfaces for providing insight into particular topics, e.g. a climate change observatory

- …

Penelope components

Each component is a web service that is hosted somewhere on the internet and is accessible via a web API. This means that a user can send input to the component in an HTTP request and receive the result in the HTTP response. Tools for sending such requests are readily available in most programming languages, as well as on the command line (e.g. cURL). The only constraint on Penelope components is that all data is sent via POST requests in JSON format.
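Because a component is simply an HTTP endpoint that accepts JSON in a POST request, Python's standard library alone is enough to call one. The following is a minimal sketch, not part of Penelope itself: the helper names `build_component_request` and `call_component` are hypothetical, and `call_component` assumes that the component answers with a JSON body.

```python
import json
import urllib.request


def build_component_request(endpoint: str, payload: dict) -> urllib.request.Request:
    """Build a POST request that carries `payload` as a JSON body (hypothetical helper)."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_component(endpoint: str, payload: dict, timeout: float = 30.0):
    """Send the request and decode the reply, assuming the component returns JSON."""
    request = build_component_request(endpoint, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For instance, `call_component("https://penelope.vub.be/spacy-api/tokenize", {"model": "en", "sentence": "Hello World!"})` would send the tokenization request whose specification is given later in this section.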

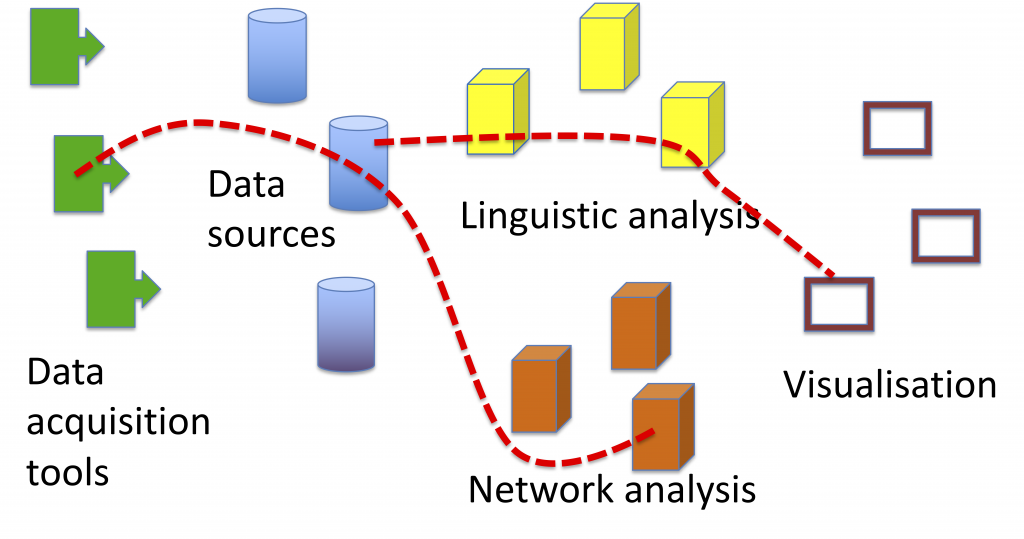

One of the main ideas behind the Penelope platform is that users can freely combine existing components to achieve new analyses. This can, for example, be done by chaining together a component for gathering Twitter data, a component for performing social network analysis and a component for visualising the results of this analysis. Such a chain is called a pipeline. The chaining of individual components into pipelines is visualised below.
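The idea of a pipeline can be sketched in a few lines of Python. This is a toy illustration, not Penelope's own chaining mechanism: each step is modelled as a function from a JSON-compatible dictionary to another dictionary, and the three steps `gather`, `analyse` and `visualise` are hypothetical local stand-ins for what would, in practice, be HTTP POST calls to remote components.

```python
from typing import Callable, Iterable

# A "component" here is any function from a JSON-compatible dict to another dict.
# In a real pipeline, each step would wrap a POST request to a component's endpoint.
Component = Callable[[dict], dict]


def run_pipeline(steps: Iterable[Component], data: dict) -> dict:
    """Feed the output of each component into the next one."""
    for step in steps:
        data = step(data)
    return data


# Hypothetical stand-ins for a data-gathering, an analysis and a visualisation component.
def gather(query: dict) -> dict:
    return {"texts": [f"post about {query['topic']}", "another post"]}


def analyse(data: dict) -> dict:
    return {"word_counts": [len(text.split()) for text in data["texts"]]}


def visualise(data: dict) -> dict:
    return {"chart": ["#" * n for n in data["word_counts"]]}
```

Calling `run_pipeline([gather, analyse, visualise], {"topic": "climate"})` passes the gathered texts to the analysis step and its counts to the visualisation step, yielding `{"chart": ["###", "##"]}`. Because every step shares the same dictionary-in, dictionary-out shape, steps can be reordered or swapped without changing `run_pipeline`.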

A list of components that are currently available can be found here. For contributing a component or integrating an existing web service as a component into Penelope, click here.

Using a component

Penelope components are implemented as web services in the RESTful architectural style. These services listen for HTTP requests and answer with HTTP responses. This architecture ensures that Penelope components can be used directly from almost any programming language or environment (e.g. Python, R, Java, C++, Common Lisp, JavaScript, or cURL on the command line) and that they can easily be embedded in graphical user interfaces, such as front-end websites.

We now turn to a concrete example of how a Penelope component can be used. The component featured here is a tokenization tool, hosted by the EHAI team of the VUB AI-Lab. Its function is to split a sentence into individual words. Let us first have a look at the specification of the component itself:

- The endpoint of the component is https://penelope.vub.be/spacy-api/tokenize. This means that the component listens for requests that arrive at this URL.

- The method of the request is POST. Only requests of this kind will be answered successfully.

- The data needs to be sent in JSON format.

- The data object needs to include a key “sentence” whose value is the sentence to tokenize, and a key “model” whose value is the code of the language model to be used (en, de, es, pt, fr, it, nl).
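Before sending anything over the network, a client can check its input against this specification. The helper below is a hypothetical convenience, not part of the component; it assumes the list of model codes above is complete, which may change as the service evolves.

```python
# Language model codes listed in the component's specification (may change over time).
SUPPORTED_MODELS = {"en", "de", "es", "pt", "fr", "it", "nl"}


def make_tokenize_payload(sentence: str, model: str) -> dict:
    """Build the JSON object expected by the tokenizer, validating the inputs locally."""
    if not isinstance(sentence, str) or not sentence.strip():
        raise ValueError("sentence must be a non-empty string")
    if model not in SUPPORTED_MODELS:
        raise ValueError(
            f"unsupported model {model!r}; expected one of {sorted(SUPPORTED_MODELS)}"
        )
    return {"sentence": sentence, "model": model}
```

Failing early on an unsupported language code gives a clearer error message than whatever the remote service would return for an invalid request.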

We will show examples of how to use this tokenization tool from Python, R and Common Lisp. In each programming language, we first import the necessary libraries for handling HTTP requests and, if necessary, for manipulating JSON data. Then, we implement a function that takes as input a sentence and a language code and returns the component's output, i.e. the words that occur in the sentence, decoded from JSON. Finally, we call the function to tokenize a concrete sentence. For testing, you can simply copy and paste the following code snippets into a Python, R or Common Lisp console respectively.

Python

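```python
# Import the requests library (install it first with: pip install requests)
import requests

# Define a function that calls the Penelope tokenizer component
def run_penelope_tokenizer(string, language):
    data = {'model': language, 'sentence': string}
    # json= serialises the dictionary and sets the Content-Type header to application/json
    response = requests.post("https://penelope.vub.be/spacy-api/tokenize", json=data)
    # Raise an exception if the component answered with an HTTP error status
    response.raise_for_status()
    # Decode the JSON response into Python objects
    return response.json()

# Now you can call the function
run_penelope_tokenizer("Hello World!", "en")
```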

R

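```r
# Install (once) and load the httr package
# install.packages("httr")
library(httr)

# Define a function that calls the Penelope tokenizer component
run_penelope_tokenizer <- function(string, language) {
  response <- POST("https://penelope.vub.be/spacy-api/tokenize",
                   encode = "json",
                   body = list(model = language, sentence = string))
  # Stop with an error if the component answered with an HTTP error status
  stop_for_status(response)
  # Parse the JSON response into R objects
  content(response)
}

# Now you can call the function
run_penelope_tokenizer("Hello World!", "en")
```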

Common Lisp

```lisp
;; Load the HTTP client and JSON libraries (Quicklisp downloads them if needed)
(ql:quickload '(:dexador :cl-json))

;; Define a function that calls the Penelope tokenizer component
(defun run-penelope-tokenizer (string language)
  (let ((response
          (dex:post "https://penelope.vub.be/spacy-api/tokenize"
                    :headers '(("Content-Type" . "application/json"))
                    :content (cl-json:encode-json-alist-to-string
                              `(("model" . ,language)
                                ("sentence" . ,string))))))
    ;; dex:post returns the response body as its first value; decode it from JSON
    (cl-json:decode-json-from-string response)))

;; Now you can call it
(run-penelope-tokenizer "Hello World!" "en")
```

Penelope interfaces

While Penelope components are defined by their web APIs, and are thus typically used by computer programs, Penelope interfaces are designed to be used directly by end-users (e.g. scientists, journalists or the general public). Typically, an interface takes input from the user, processes it using a pipeline of components and returns a visualisation of the results. Interfaces can be general-purpose, or highly specialised towards a particular data source, kind of analysis, or topic. General-purpose interfaces allow users to chain together existing components into novel data analysis pipelines, without the need for extensive programming. More specialised interfaces can take the form of opinion observatories that provide insight into a particular topic of interest.
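The flow an interface follows (user input, then a pipeline of components, then a visualisation) can be sketched as below. Everything here is local and hypothetical: `tokenize_locally` splits on whitespace instead of calling a Penelope component, the analysis step is a simple word count, and the "visualisation" is a plain-text bar chart rather than a real front-end.

```python
from collections import Counter
from typing import List


def tokenize_locally(text: str) -> List[str]:
    """Hypothetical stand-in for a call to a remote tokenizer component."""
    return text.lower().split()


def render_bar_chart(counts: Counter, top: int = 5) -> str:
    """Return a plain-text bar chart of the most frequent items."""
    rows = counts.most_common(top)
    width = max((len(word) for word, _ in rows), default=0)
    return "\n".join(f"{word.ljust(width)} {'#' * n}" for word, n in rows)


def word_frequency_interface(user_text: str) -> str:
    """User input -> pipeline of (stand-in) components -> visualisation."""
    tokens = tokenize_locally(user_text)  # step 1: tokenization
    counts = Counter(tokens)              # step 2: analysis
    return render_bar_chart(counts)       # step 3: visualisation
```

For the input `"the cat and the hat"`, the first line of the chart is `the ##`, followed by one single-bar line for each of the other three words. A real interface would hide this plumbing behind a form or a dashboard.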

A list of available interfaces can be found here. For contributing an interface, click here.